Automating Mathematics

PolyMath, Type Theory and Learning

Department of Mathematics

Indian Institute of Science

Bangalore

Goals

- Equip computers with all the major capabilities used by Mathematicians and the Mathematics community in the discovery and proof of Mathematical results and concepts.

- Beyond experimentation/computation/enumeration.

- We must have, if necessary invent, objective measures for the different capabilities.

- Our attempt is to build a bridge to the highly successful AI systems and concepts: AlphaZero, Reinforcement learning, Representation learning, Natural Language Processing, GANs etc.

What computers can do

- Numerical computation.

- Enumeration.

- Symbolic algebra.

- Exact real number arithmetic.

- Linear programming.

- SAT solvers.

- Compact Enumeration.

Some Computer-Assisted Proofs

- Some major results have been proved with computer assistance:

- Four colour theorem.

- Kepler conjecture.

- Boolean Pythagorean triples problem.

- Existence of Lorenz attractor.

- The $290$ theorem for integral quadratic forms.

- We will focus on a less well-known result, but which has some useful features.

Homomogeneous length functions on Groups

A PolyMath adventure

The PolyMath 14 participants

- Tobias Fritz, MPI MIS

- Siddhartha Gadgil, IISc, Bangalore

- Apoorva Khare, IISc, Bangalore

- Pace Nielsen, BYU

- Lior Silberman, UBC

- Terence Tao, UCLA

- On Saturday, December 16, 2017, Terrence Tao posted on his blog a question, which Apoorva Khare had asked him.

- Six days later, this was answered in a collaboration involving several mathematicians (and a computer).

- This the story of the answer and its discovery.

Is there a homogeneous, (conjugacy invariant) length function on the free group on two generators?

Length functions

- Fix a group $G = (G, \cdot)$, i.e. a set with an associative product with inverses.

- A pseudo-length function $l: G \to [0, \infty)$ is a function such that:

- $l(e) = 0$.

- $l(g^{-1}) = l(g)$ , for all $g \in G$.

- (Triangle inequality) $l(gh) \leq l(g) + l(h)$, for all $g,h\in G$.

- A length function is a pseudo-length function such that $l(g) > 0$ whenever $g\neq e$ (positivity condition).

- A pseudo-length function $l$ is said to be conjugacy invariant if $l(ghg^{-1})=l(h)$ for all $g, h \in G$.

- $l$ is said to be homogeneous if $l(g^n) = nl(g)$ for all $g \in G$.

- On the additive group $(\mathbb{Z}^2, +)$ of pairs of integers, a (conjugacy invariant) homogeneous length function is given by $l_{\mathbb{Z}^2}((a,b)) = |a| + |b|$.

Free group $\mathbb{F}_2$ on $\alpha$, $\beta$

- Consider words in four letters $\alpha$, $\beta$, $\bar{\alpha}$ and $\bar{\beta}$.

- We multiply words by concatenation, e.g. $\alpha\beta\cdot\bar{\alpha}\beta = \alpha\beta\bar{\alpha}\beta$.

- Two words are regarded as equal if they can be related by cancellating adjacent letters that are inverses, e.g. $\alpha\beta\bar{\beta}\alpha\beta = \alpha\alpha\beta$.

- This gives a group; e.g. $(\alpha\bar{\beta}\alpha\beta\beta)^{-1} = \bar{\beta}\bar{\beta}\bar{\alpha}\beta\bar{\alpha}$.

- Ignoring order of letters in a word $g$ gives an element $\bar{g}=\alpha^k\beta^l\leftrightarrow (k, l)\in \mathbb{Z}^2$, e.g. $\alpha\beta\beta\alpha\bar{\beta} \mapsto (2, 1)$; $l(g) = l_{\mathbb{Z}^2}((k, l))$ gives a pullback homogeneous, conjugacy-invariant pseudo-length on $\mathbb{F}_2$.

The Main results

- Question: Is there a homogeneous length function on the free group on two generators?

- Answer: No;

- In fact all homogeneous pseudo-lengths are pullbacks from Abelian groups.

First reductions

- (Fritz): Homomogeneous implies Conjugacy invariant.

- (Tao, Khare): $l(g^2) = 2l(g) \forall g\in G$ implies Homogeneity.

Finding bounds: contradict positivity?

- Fix $l$ homomogeneous pseudo-length function.

- The triangle inequality gives bounds on $l(gh)$ in terms of $l(g)$ and $l(h)$.

- Homogeneity gives a bound on $l(g)$ in terms of a bound on $l(g^n)$; also use conjugacy invariance.

- Find bounds in terms of bounds for other elements:

- $l([x, y])$ in terms of $l(x)$ and $l(y)$, where $[x, y] = xyx^{-1}y^{-1}$

- $l(g)$ for fixed $g$ in terms of bounds on generators.

- Combine bounds, including by interpreting in terms of convexity.

Seeking a homogeneous length function

- We can attempt to construct pseudo-lengths that are positive, or at least such that $l([\alpha, \beta])> 0$.

- Can consider quotients larger than $\mathbb{Z}^2$: negative results by Sawin, Silberman, Tao.

- There were also attempted constructions based on functional analysis.

- Given a candidate $l: G \to [0, \infty)$, try to fix this using homogenization and the Kobayashi construction.

- I hoped to combine these with an approach based on non-crossing matchings.

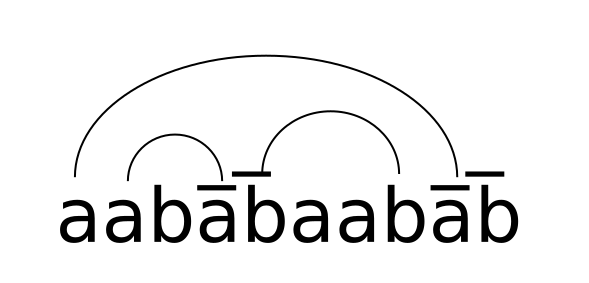

Non-crossing matchings

- Given a word in $\mathbb{F}_2$, we consider matchings such that

- letters are paired with their inverses,

- there are no crossings

- We minimize the number of unmatched letters.

- For $g\in \mathbb{F}_2$, define the Watson-Crick length $l^{}_{WC}(g)$ to be the minimum number of unmatched letters over all non-crossing matchings.

- $l^{}_{WC}$ is unchanged under cancellation (hence well-defined on $\mathbb{F}_2$) and conjugacy invariant.

- It is in fact the maximal conjugacy-invariant length function with generators having length at most $1$.

- However, if $g=\alpha[\alpha, \beta]$, then $l^{}_{WC}(g^2)= 4$, but $l^{}_{WC}(g) = 3$, violating homogeneity.

The great bound chase

- By Tuesday morning, most people were convinced that there are no homogeneous length functions on the free group.

- There was a steady improvements in the combinatorial/analytic bounds on $l([\alpha, \beta])$.

- These seemed to be stuck above 1 (as observed by Khare) - but eventually broke this barrier (work of Fritz, Khare, Nielsen, Silberman, Tao).

- At this stage, my approach diverged.

Computer Assisted proving

- We can recursively compute the matching length $l^{}_{WC}(g)$ for a word $g$.

- This gives an upper bound on all conjugacy-invariant normalized lengths.

- This can be combined with using homogenity.

- Using this, I obtained an upper bound of about $0.85$ on $l(\alpha, \beta)$.

- This was upgraded to a (computer) checkable proof.

- On Thursday morning, I posted an in principle human readable proof of a bound.

- The computer-generated proof was studied by Pace Nielsen, who extracted the internal repetition trick.

- This was extended by Nielsen and Fritz and generalized by Tao; from this Fritz obtained the key lemma:

- Let $f(m, k) = l(x^m[x, y]^k)$. Then $f(m, k) \leq\frac{f(m-1, k) + f(m+1, k-1)}{2}$, i.e., if $Y=\pm 1$ each with probablity $1/2$, $f(m, k) \leq E(f( (m, k - 1/2) + Y(1, -1/2) ))$.

- A probabilistic argument of Tao finished the proof.

The Gross Proof and Beyond

Computing bounds and proofs

- For $g=xh$, $x \in \{\alpha, \beta, \bar{\alpha}, \bar{\beta}\}$, the length $l^{}_{WC}(g)$ is the minimum of:

- $1 + l^{}_{WC}(h)$ : corresponding to $x$ unmatched.

- $\min\{l^{}_{WC}(u) + l^{}_{WC}(v): h = u\bar{x}v \}$.

- We can describe a minimal non-crossing matching by a similar recursive definition.

- A similar recursion gives a proof of a bound on $l(g)$ for $g$ a homogeneous, conjugacy-invariant length with $l(\alpha)=l(\beta)=1$.

- We can also use homogeneity for selected elements and powers to bound the length function $l$.

A Type for Proofs of $l(word)\leq bound$

sealed abstract class LinNormBound(val word: Word,val bound: Double)

final case class Gen(n: Int) extends LinNormBound(Word(Vector(n)), 1)

final case class ConjGen(n: Int,pf: LinNormBound) extends

LinNormBound(n +: pf.word :+ (-n), pf.bound)

final case class Triang(pf1: LinNormBound, pf2: LinNormBound) extends

LinNormBound( pf1.word ++ pf2.word, pf1.bound + pf2.bound)

final case class PowerBound(base: Word, n: Int, pf: LinNormBound) extends

LinNormBound(base, pf.bound/n){require(pf.word == base.pow(n))}

final case object Empty extends LinNormBound(Word(Vector()), 0)

Domain Specific Foundations

- We defined a scala type (class) and its subtypes.

- Objects of this type are proofs of bounds.

- The only way to construct such objects of this type is by constructors of its subtypes (case classes).

- A proof of a bound for conjugacy invariant length functions is correct iff it typechecks (i.e. compiles).

- This is inspired by Propostions as Types in Dependent Type Theories.

- However, we need to load (i.e. run) to check proofs using homogenity, as also to see what is proved.

Merits of the computer proof

- Our proofs, and their correctness are self-contained, and separate from the act of finding the proof.

- Hence the correctness of the proof depended only on the correctness of the code defining proofs, which was tiny.

- Further, while the proof was large, the search space for the proof was enormous.

- Hence the proof had information content beyond establishing the truth of a statement.

- In particular, one can learn from such a proof.

Limitations of the computer proof

- The elements for which we applied homogeneity were selected by hand.

- More importantly, in our representations of proofs, the bounds were only for concrete group elements.

- In particular, we cannot

- represent inequalities for expressions.

- use induction.

- Would want proof in complete foundations - which I formalized a few days after the PolyMath proof.

Discovering proofs?

- If a proof can be represented, it can in principle be discovered by a tree search.

- In practice, there is a combinatorial explosion unless we are selective while branching.

- A good way to choose branches is to look for good intermediate lemmas.

- We consider an example problem: given a set $M$ with a multiplication $mul$ and left and right identitites $e_l$ and $e_r$, deduce $e_l=e_r$.

Underlying Theory

- We have not explained:

- The philosophy behind the domain specific foundations.

- What we mean by the formalized proof.

- What is a good lemma.

- These are all based on (Homotopy) Type Theoretic foundations of mathematics.

(Homotopy) Type Theory

Type Systems, Type Theories, Parts of Speech

- Statements and expressions in programming languages/formal logical systems, like sentences/phrases in natural languages, are formed from simpler ones using the rules of syntax.

- The rules of syntax are determined purely by the types/parts-of-speech of the constituents.

- In natural languages, simple type systems and first-order logic, the set of types is fixed.

- In most programming languages and in higher-order logic, we have type forming rules, allowing types to be formed, say from other types.

Some Types and Rules

- Given types $A$ and $B$, we can form the type of functions $A \to B$.

- The types $\mathbb{0}$ has no terms .

- The type $\mathbb{1}$ has a single term $*$.

- The product type $A \times B$ has terms (corresponding to) pairs $(a, b)$ with $a: A$ and $b : B$.

- The sum (disjoint union) type $A \oplus B$ has terms (corresponding to) terms of $A$ and terms of $B$.

- Most types we form are inductive types - in scala this is the sealed trait/class and case class construction.

Dependent type theories

- In a Dependent type theory/system, types are themselves first-class citizens.

- This means they can be argument and results of functions, assigned to variables etc.

- Type forming rules can involve terms (objects), for example vectors of length $n$ form a type $Vec(A)(n)$.

- Types themselves have types, called universes $\mathfrak{U}$.

- A function $P: A \to \mathfrak{U}$ to a universe is called a type family, e.g. $(n : \mathbb{N}) \mapsto Vec(\mathbb{R})(n)$.

Dependent functions and dependent pairs

- In dependent type theory, we generalize functions to dependent functions with codomain $P: A \to \mathfrak{U}$.

- The type of dependent functions is $\Pi_{a: A} P(a)$.

- If $a: A$ and $f: \Pi_{a: A} P(a)$, we can form $f(a) : P(a)$.

- We can also form the type $\Sigma_{a: A} P(a)$ of dependent pairs $(a : A, b : P(a))$.

- Dependent pairs, Vectors etc. are inductive types or inductive type families.

Propositions as types

- A type $A$ is inhabited if there is a term $a$ with $a : A$.

- We interpret types as propositions, i.e., logical statements.

- If a type $A$ is interpreted as a proposition, a term $a : A$ is interpreted as a proof of (or witness to) $A$.

- In particular, a proposition is true if and only if the corresponding type is inhabited.

- Note that we must be able to form types representing mathematical propositions by the type formation rules.

Combining propositions

- The proposition $A \Rightarrow B$ is represented by $A \to B$.

- The propostion $A\wedge B$ is represented by $A \times B$.

- The proposition $A \vee B$ is represented by $A \oplus B$.

- The proposition $\neg A$ is represented by $A \to \mathbb{0}$.

Let $A$ and $B$ be types, regarded as representing propositions.

Quantifying propositions

- A proposition depending on a variable $x : A$ is represented by a type family $P : A \to \mathfrak{U}$.

- The proposition $\forall x\in A,\ P(x)$ is $\prod_{x: A} P(x)$.

- The proposition $\exists x\in A,\ P(x)$ is $\sum_{x : A} P(x)$.

Type theoretic foundations

- A Type theory is a type system rich enough to replace Set Theory + FOL as foundations for mathematics.

- A term $a$ having a type $A$ corresponds to $a \in A$.

- Rules for forming terms and types, and for determining types, are purely syntactic.

- Nevertheless, the rules for forming types are rich enough that types can play the role of sets - for instance, prime numbers form a type.

- Even more remarkably, propositions and proofs can be expressed in terms of types and terms.

The Proving-Ground Project

Contributors

- Dymtro Mitin

- Tomoaki Hashizaki

- Olivier Roland

- Sayantan Khan

Homotopy Type Theory in Scala

- We have implemented homotopy type theory in the scala programming language.

- The scala types of terms bound the HoTT types in a useful way (scala has subtypes).

- Symbolic algebra is incorporated by allowing scala objects (data structures) to represent HoTT terms.

Useful theorems and proofs

- A theorem with a simple statement but difficult proof is useful.

- A theorem used to prove many other theorems is useful.

- Particularly for applications, we may have an externally determined notion of a priori usefulness.

- With Homotopy Type Theory, and Machine learning techniques such as backward propagation, all these can be naturally captured; we can also try to capture relationships between mathematical objects.

Reinforcement learning: term-type map

- Given an initial distribution $P_0$ on terms, rules for forming terms recursively gives a new distribution e.g. $$P = \alpha P_0 + \beta \mu_*(P) + \gamma \theta_*(P \times P) + \dots$$

- A probability distribution on terms gives one on types by mapping (proof distribution).

- As (inhabited) types themselves are terms, we get a restricted distribution (proposition distribution).

- We can compare these to get a relative entropy.

- We also have a (entropy based) cost associated to the generative model.

Exploration and Learning

- A good lemma is one where the gain in relative entropy from remebering the proof exceeds the cost in generation.

- This is the criterion used in the tree search.

-

This naturally extends in various ways:

- learning weights by using gradient flow (backward propagation).

- using other components for the proposition distribution: from the literature, experimentation, backward reasoning from goals etc.

- basing model on vectors (representation learning).

From the Lean Theorem Prover

package provingground.library

import provingground._

import HoTT._

import induction._

import implicits._

import shapeless._

import Fold._

object nat$decidable_eq {

val value = lambda("'ag_1531296106_1367828936" :: "nat" :: Type)(({

val rxyz = pprodInd.value(piDefn("'aj_117702620" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_751289559" :: Type)(FuncTyp("'c_751289559" :: Type, FuncTyp("'c_751289559" :: Type, Prop))))("nat" :: Type)("'ag_1531296106_1367828936" :: "nat" :: Type)("'aj_117702620" :: "nat" :: Type))))(({

val rxyz = natInd.value.rec(Type)

rxyz

})("punit" :: Type)(lmbda("'h_159188406_990306052" :: "nat" :: Type)(lmbda("'i_854809894_325907817" :: Type)(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_482846896" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_2112253922" :: Type)(FuncTyp("'c_2112253922" :: Type, FuncTyp("'c_2112253922" :: Type, Prop))))("nat" :: Type)("'h_159188406_990306052" :: "nat" :: Type)("'aj_482846896" :: "nat" :: Type))))("'i_854809894_325907817" :: Type))("punit" :: Type))))("'ag_1531296106_1367828936" :: "nat" :: Type)).rec(piDefn("'aj_228954873" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_1303348173" :: Type)(FuncTyp("'c_1303348173" :: Type, FuncTyp("'c_1303348173" :: Type, Prop))))("nat" :: Type)("'ag_1531296106_1367828936" :: "nat" :: Type)("'aj_228954873" :: "nat" :: Type))))

rxyz

})(lmbda("'s_318103601_490050232" :: piDefn("'aj_15078438_215709177" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_517841910" :: Type)(FuncTyp("'c_517841910" :: Type, FuncTyp("'c_517841910" :: Type, Prop))))("nat" :: Type)("'ag_1531296106_1367828936" :: "nat" :: Type)("'aj_15078438_215709177" :: "nat" :: Type))))(lmbda("_" :: ({

val rxyz = natInd.value.rec(Type)

rxyz

})("punit" :: Type)(lmbda("'h_159188406_753078297" :: "nat" :: Type)(lmbda("'i_854809894_590543722" :: Type)(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_1051336192" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_704808476" :: Type)(FuncTyp("'c_704808476" :: Type, FuncTyp("'c_704808476" :: Type, Prop))))("nat" :: Type)("'h_159188406_753078297" :: "nat" :: Type)("'aj_1051336192" :: "nat" :: Type))))("'i_854809894_590543722" :: Type))("punit" :: Type))))("'ag_1531296106_1367828936" :: "nat" :: Type))("'s_318103601_490050232" :: piDefn("'aj_15078438_215709177" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_517841910" :: Type)(FuncTyp("'c_517841910" :: Type, FuncTyp("'c_517841910" :: Type, Prop))))("nat" :: Type)("'ag_1531296106_1367828936" :: "nat" :: Type)("'aj_15078438_215709177" :: "nat" :: Type))))))(({

val rxyz = natInd.value.induc(lmbda("'v_1087445862_593085322" :: "nat" :: Type)(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_202430491" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_374127766" :: Type)(FuncTyp("'c_374127766" :: Type, FuncTyp("'c_374127766" :: Type, Prop))))("nat" :: Type)("'v_1087445862_593085322" :: "nat" :: Type)("'aj_202430491" :: "nat" :: Type))))(({

val rxyz = natInd.value.rec(Type)

rxyz

})("punit" :: Type)(lmbda("'h_159188406_283972159" :: "nat" :: Type)(lmbda("'i_854809894_765942027" :: Type)(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_362557726" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_187883991" :: Type)(FuncTyp("'c_187883991" :: Type, FuncTyp("'c_187883991" :: Type, Prop))))("nat" :: Type)("'h_159188406_283972159" :: "nat" :: Type)("'aj_362557726" :: "nat" :: Type))))("'i_854809894_765942027" :: Type))("punit" :: Type))))("'v_1087445862_593085322" :: "nat" :: Type))))

rxyz

})(("pprod.mk" :: piDefn("'f_858730760" :: Type)(piDefn("'g_84737018" :: Type)(FuncTyp("'f_858730760" :: Type, FuncTyp("'g_84737018" :: Type, ("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))("'f_858730760" :: Type)("'g_84737018" :: Type))))))(piDefn("'aj_1405500650" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_1679528951" :: Type)(FuncTyp("'c_1679528951" :: Type, FuncTyp("'c_1679528951" :: Type, Prop))))("nat" :: Type)("nat.zero" :: "nat" :: Type)("'aj_1405500650" :: "nat" :: Type))))("punit" :: Type)(lambda("'ak_992690145_66345703" :: "nat" :: Type)(({

val rxyz = natInd.value.induc(lmbda("$vuyd_613901509_1138574320" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_51816028" :: Type)(FuncTyp("'c_51816028" :: Type, FuncTyp("'c_51816028" :: Type, Prop))))("nat" :: Type)("nat.zero" :: "nat" :: Type)("$vuyd_613901509_1138574320" :: "nat" :: Type))))

rxyz

})("_" :: ("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_1616709371" :: Type)(FuncTyp("'c_1616709371" :: Type, FuncTyp("'c_1616709371" :: Type, Prop))))("nat" :: Type)("nat.zero" :: "nat" :: Type)("nat.zero" :: "nat" :: Type)))(lambda("'k_621156251_206574004" :: "nat" :: Type)(lmbda("_" :: ("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_1547408363" :: Type)(FuncTyp("'c_1547408363" :: Type, FuncTyp("'c_1547408363" :: Type, Prop))))("nat" :: Type)("nat.zero" :: "nat" :: Type)("'k_621156251_206574004" :: "nat" :: Type)))("_" :: ("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_2022960021" :: Type)(FuncTyp("'c_2022960021" :: Type, FuncTyp("'c_2022960021" :: Type, Prop))))("nat" :: Type)("nat.zero" :: "nat" :: Type)(("nat.succ" :: FuncTyp("nat" :: Type, "nat" :: Type))("'k_621156251_206574004" :: "nat" :: Type))))))("'ak_992690145_66345703" :: "nat" :: Type)))("punit.star" :: "punit" :: Type))(lambda("'v_256405719_429396927" :: "nat" :: Type)(lmbda("'w_566843556_179354281" :: ("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_257559038" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_265527310" :: Type)(FuncTyp("'c_265527310" :: Type, FuncTyp("'c_265527310" :: Type, Prop))))("nat" :: Type)("'v_256405719_429396927" :: "nat" :: Type)("'aj_257559038" :: "nat" :: Type))))(({

val rxyz = natInd.value.rec(Type)

rxyz

})("punit" :: Type)(lmbda("'h_159188406_696790367" :: "nat" :: Type)(lmbda("'i_854809894_317859634" :: Type)(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_1612878706" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_479764664" :: Type)(FuncTyp("'c_479764664" :: Type, FuncTyp("'c_479764664" :: Type, Prop))))("nat" :: Type)("'h_159188406_696790367" :: "nat" :: Type)("'aj_1612878706" :: "nat" :: Type))))("'i_854809894_317859634" :: Type))("punit" :: Type))))("'v_256405719_429396927" :: "nat" :: Type)))(("pprod.mk" :: piDefn("'f_1605051863" :: Type)(piDefn("'g_1443604027" :: Type)(FuncTyp("'f_1605051863" :: Type, FuncTyp("'g_1443604027" :: Type, ("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))("'f_1605051863" :: Type)("'g_1443604027" :: Type))))))(piDefn("'aj_320897960" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_1411998825" :: Type)(FuncTyp("'c_1411998825" :: Type, FuncTyp("'c_1411998825" :: Type, Prop))))("nat" :: Type)(("nat.succ" :: FuncTyp("nat" :: Type, "nat" :: Type))("'v_256405719_429396927" :: "nat" :: Type))("'aj_320897960" :: "nat" :: Type))))(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_138014301" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_2131675862" :: Type)(FuncTyp("'c_2131675862" :: Type, FuncTyp("'c_2131675862" :: Type, Prop))))("nat" :: Type)("'v_256405719_429396927" :: "nat" :: Type)("'aj_138014301" :: "nat" :: Type))))(({

val rxyz = natInd.value.rec(Type)

rxyz

})("punit" :: Type)(lmbda("'h_159188406_1856699345" :: "nat" :: Type)(lmbda("'i_854809894_883946601" :: Type)(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_1842985757" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_1398049368" :: Type)(FuncTyp("'c_1398049368" :: Type, FuncTyp("'c_1398049368" :: Type, Prop))))("nat" :: Type)("'h_159188406_1856699345" :: "nat" :: Type)("'aj_1842985757" :: "nat" :: Type))))("'i_854809894_883946601" :: Type))("punit" :: Type))))("'v_256405719_429396927" :: "nat" :: Type)))("punit" :: Type))(lambda("'ak_992690145_1869838569" :: "nat" :: Type)(({

val rxyz = natInd.value.induc(lmbda("$vuyd_613901509_1095799675" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_2017105073" :: Type)(FuncTyp("'c_2017105073" :: Type, FuncTyp("'c_2017105073" :: Type, Prop))))("nat" :: Type)(("nat.succ" :: FuncTyp("nat" :: Type, "nat" :: Type))("'v_256405719_429396927" :: "nat" :: Type))("$vuyd_613901509_1095799675" :: "nat" :: Type))))

rxyz

})("_" :: ("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_1559678193" :: Type)(FuncTyp("'c_1559678193" :: Type, FuncTyp("'c_1559678193" :: Type, Prop))))("nat" :: Type)(("nat.succ" :: FuncTyp("nat" :: Type, "nat" :: Type))("'v_256405719_429396927" :: "nat" :: Type))("nat.zero" :: "nat" :: Type)))(lambda("'k_217786156_1059286668" :: "nat" :: Type)(lmbda("_" :: ("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_998126858" :: Type)(FuncTyp("'c_998126858" :: Type, FuncTyp("'c_998126858" :: Type, Prop))))("nat" :: Type)(("nat.succ" :: FuncTyp("nat" :: Type, "nat" :: Type))("'v_256405719_429396927" :: "nat" :: Type))("'k_217786156_1059286668" :: "nat" :: Type)))("_" :: ("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_590214278" :: Type)(FuncTyp("'c_590214278" :: Type, FuncTyp("'c_590214278" :: Type, Prop))))("nat" :: Type)(("nat.succ" :: FuncTyp("nat" :: Type, "nat" :: Type))("'v_256405719_429396927" :: "nat" :: Type))(("nat.succ" :: FuncTyp("nat" :: Type, "nat" :: Type))("'k_217786156_1059286668" :: "nat" :: Type))))))("'ak_992690145_1869838569" :: "nat" :: Type)))(("pprod.mk" :: piDefn("'f_14519285" :: Type)(piDefn("'g_88262494" :: Type)(FuncTyp("'f_14519285" :: Type, FuncTyp("'g_88262494" :: Type, ("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))("'f_14519285" :: Type)("'g_88262494" :: Type))))))(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_161697836" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_257271152" :: Type)(FuncTyp("'c_257271152" :: Type, FuncTyp("'c_257271152" :: Type, Prop))))("nat" :: Type)("'v_256405719_429396927" :: "nat" :: Type)("'aj_161697836" :: "nat" :: Type))))(({

val rxyz = natInd.value.rec(Type)

rxyz

})("punit" :: Type)(lmbda("'h_159188406_1711678532" :: "nat" :: Type)(lmbda("'i_854809894_1639579534" :: Type)(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_1427415108" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_888554361" :: Type)(FuncTyp("'c_888554361" :: Type, FuncTyp("'c_888554361" :: Type, Prop))))("nat" :: Type)("'h_159188406_1711678532" :: "nat" :: Type)("'aj_1427415108" :: "nat" :: Type))))("'i_854809894_1639579534" :: Type))("punit" :: Type))))("'v_256405719_429396927" :: "nat" :: Type)))("punit" :: Type)("'w_566843556_179354281" :: ("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_257559038" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_265527310" :: Type)(FuncTyp("'c_265527310" :: Type, FuncTyp("'c_265527310" :: Type, Prop))))("nat" :: Type)("'v_256405719_429396927" :: "nat" :: Type)("'aj_257559038" :: "nat" :: Type))))(({

val rxyz = natInd.value.rec(Type)

rxyz

})("punit" :: Type)(lmbda("'h_159188406_696790367" :: "nat" :: Type)(lmbda("'i_854809894_317859634" :: Type)(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(("pprod" :: FuncTyp(Type, FuncTyp(Type, Type)))(piDefn("'aj_1612878706" :: "nat" :: Type)(("decidable" :: FuncTyp(Prop, Type))(("eq" :: piDefn("'c_479764664" :: Type)(FuncTyp("'c_479764664" :: Type, FuncTyp("'c_479764664" :: Type, Prop))))("nat" :: Type)("'h_159188406_696790367" :: "nat" :: Type)("'aj_1612878706" :: "nat" :: Type))))("'i_854809894_317859634" :: Type))("punit" :: Type))))("'v_256405719_429396927" :: "nat" :: Type)))("punit.star" :: "punit" :: Type)))))("'ag_1531296106_1367828936" :: "nat" :: Type)))

}

NLP: extracting from human literature.

- We aim to extract mathematical objects from the literature, with reasonably high accuracy.

- These can be used for learning, giving terms for generation and types as goals.

- This is a translation problem, except the target language has strong restrictions.

- To extract from natural language, we

- use a natural language parser,

- target an intermediate language (Naproche CNL),

- aim to translate this into HoTT, combing with parsing latex formulas.

Concluding Remarks

- Systems such as AlphaGo Zero show that computers can learn to make judgements, be original etc.

- Hence we can hope that computers acquire a succession of new mathematical capabilities.

- Our first steps should expand the range of ways in which computers help in mathematics.

- A better developed system will contribute to semantic tooling for mathematics.

- Finally, if and when really powerful provers are developed, they can extend the reach of mathematics.